Is Your Business Training AI How To Hack You?

Artificial Intelligence (AI) has rapidly shifted from futuristic promise to everyday necessity. Whether it’s used for customer service, document drafting, or complex data analysis, AI is helping businesses become more efficient and competitive. Yet, this convenience comes with an often-overlooked risk: your business may be inadvertently training AI how to hack you.

For companies in North Carolina—from innovative startups in Raleigh to established law firms in Charlotte—this is not a distant or abstract problem. It’s a real and present danger. In this comprehensive guide, we’ll explore how businesses unintentionally expose themselves, the risks at play, real-world examples, and detailed strategies to protect your organization.

What Does “Training AI to Hack You” Really Mean?

When employees use AI tools such as ChatGPT, Google Gemini, or Microsoft Copilot, they often input sensitive company data. This might include financial reports, proprietary code, client contracts, or personal information. While the intention is productivity, the consequence could be disastrous: these AI systems learn from the data you feed them. If not properly managed, this data could resurface elsewhere or be manipulated by cybercriminals.

To illustrate, imagine if an employee at a Cary-based manufacturing company uploaded confidential product schematics into a free AI tool to “summarize” them. That data could be exposed to unknown servers, increasing the risk of theft. The situation is akin to leaving the combination to a vault written on a sticky note and taped to the door—it makes unauthorized access significantly easier.

A Real-World Warning: Samsung’s ChatGPT Leak

In 2023, Samsung engineers accidentally uploaded sensitive source code into ChatGPT. The AI then retained aspects of this data, raising alarms about intellectual property leaks. This prompted Samsung to immediately ban public AI usage in its workplace.

If a tech giant with vast cybersecurity resources can fall prey to such missteps, imagine the risks faced by small to mid-sized businesses across North Carolina. A Greensboro healthcare clinic that unknowingly feeds patient data into AI, for instance, could face HIPAA violations, lawsuits, and irreparable reputation damage.

Understanding Prompt Injection: The Hacker’s Secret Weapon

One of the most insidious risks associated with AI use is prompt injection. In this form of cyberattack, hackers embed malicious instructions in seemingly harmless text, documents, or code snippets. When an AI system processes the information, it unwittingly executes the malicious commands. This can lead to data exposure, broken safeguards, or even unauthorized system access.

Think of it as a digital Trojan horse. The AI “trusts” the input and obeys hidden instructions without realizing it’s being manipulated. For example, a hacker might hide prompts in a PDF that a Raleigh law firm uploads into AI for contract review. Once processed, the AI could inadvertently reveal confidential client information.

Why North Carolina Businesses Are Especially Vulnerable

North Carolina is home to a diverse economy—healthcare, legal, finance, education, and advanced manufacturing all play central roles. Each of these sectors manages sensitive data:

- Healthcare practices in Chapel Hill must comply with HIPAA regulations and protect patient records.

- Law firms in Charlotte handle confidential client strategies, contracts, and case files.

- Research Triangle startups often guard proprietary algorithms, designs, or technology blueprints.

- Financial services firms in Durham manage highly sensitive customer financial records.

For each of these industries, a single AI misstep could result in regulatory penalties, intellectual property theft, or catastrophic breaches of trust.

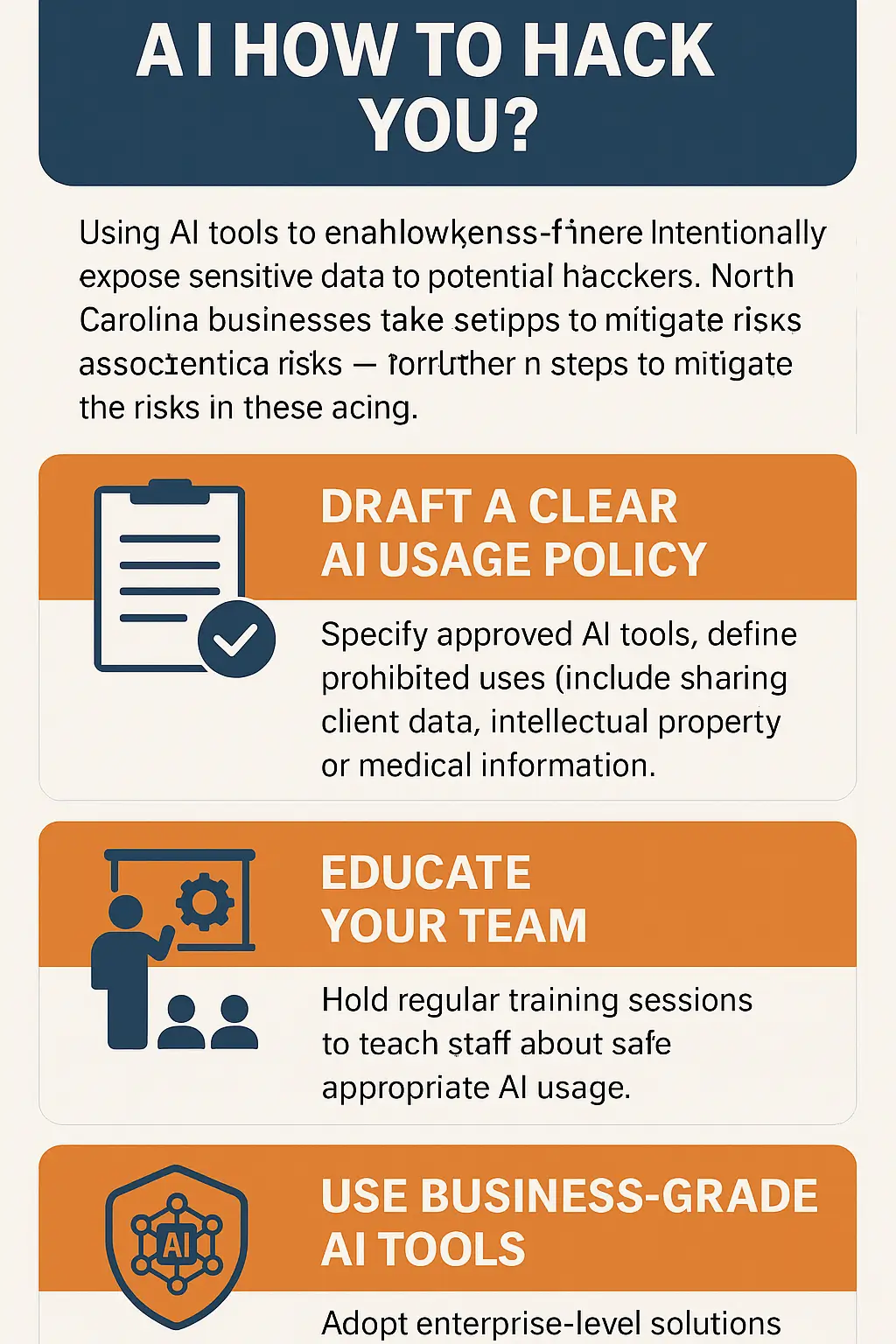

Four Actionable Steps to Protect Your Business

- Create a Clear AI Usage Policy

- Identify approved AI tools that employees may use (e.g., Microsoft Copilot for Business).

- Explicitly restrict sensitive data (like patient records, legal contracts, or financial data) from being entered into public AI tools.

- Establish clear boundaries: safe use for marketing drafts, brainstorming, or analyzing public data.

- Provide templates for safe usage scenarios.

- Train Your Employees

- Conduct regular workshops on AI security, explaining risks like prompt injection in simple terms.

- Use analogies: “AI is like a public chalkboard—anything you write might be seen by others.”

- Share real-world stories, like Samsung’s misstep, to emphasize the risks.

- Encourage staff to ask before using AI in sensitive workflows.

- Adopt Enterprise-Grade AI Tools

- Invest in platforms built for business security. Tools like Microsoft Copilot and Google Gemini Enterprise versions often guarantee that business data won’t be stored or used for public model training.

- Consider working with a IT services provider to ensure secure integration.

- Monitor and Control AI Access

- Block unauthorized AI tools on company devices and networks.

- Use logging systems to track AI interactions.

- Regularly review these logs to detect unusual activity.

- Establish escalation procedures for suspected breaches.

North Carolina Case Studies & Hypotheticals

Case Study 1: Raleigh Marketing Firm

A local marketing agency allowed employees to use free AI tools to draft client proposals. One junior staff member uploaded sensitive pricing strategies, which were later discovered in AI outputs. The firm quickly enacted a strict AI policy and now uses enterprise-grade tools exclusively.

Case Study 2: Charlotte Law Office

A law firm in Charlotte unknowingly exposed client case files when using AI for drafting contracts. After identifying the breach risk, they implemented strict guidelines prohibiting client data in public AI platforms and partnered with a managed IT provider.

Hypothetical: Wilmington Manufacturer

Imagine a manufacturer in Wilmington uploading design schematics into an AI tool. If these designs leaked, competitors could replicate their work, costing millions in lost R&D. This scenario underscores the need for clear usage boundaries.

Emerging Threats Beyond Prompt Injection

AI threats evolve rapidly. Beyond prompt injection, attackers are increasingly exploiting:

- Jailbreak prompts: Tricks that force AI to ignore its safety rules.

- Data poisoning: Inserting malicious data during training to skew AI outputs.

- Model inversion attacks: Extracting sensitive training data from AI outputs.

For North Carolina businesses, this means policies and defenses must be continually updated.

Sample AI Usage Policy Template

Here’s a simplified framework North Carolina businesses can adapt:

- Permitted AI Tools: List approved platforms (e.g., Microsoft Copilot, Google Gemini Enterprise).

- Prohibited Data: Ban entry of personally identifiable information (PII), intellectual property, and financial details.

- Approved Use Cases: Allow brainstorming, public research summaries, and generic document drafting.

- Training & Compliance: Mandate annual AI security training for all employees.

- Monitoring: IT will log and review AI usage quarterly.

- Escalation Protocols: Define steps if a breach or misuse is detected.

The ROI of Secure AI Adoption

Safe AI usage offers significant benefits:

- Efficiency: Businesses can automate tasks, saving time and money.

- Competitive Advantage: Secure adoption allows firms to innovate faster.

- Trust: Clients and customers feel confident knowing their data is protected.

- Regulatory Compliance: Avoid fines and penalties associated with HIPAA or data privacy violations.

In contrast, the costs of a breach are staggering. According to recent studies, the average cost of a data breach in the U.S. exceeds $4.5 million. For small to mid-sized North Carolina businesses, this could mean bankruptcy.

Conclusion

AI is not the enemy—misuse is. Without careful oversight, businesses unintentionally feed sensitive data into AI systems, effectively training them to become tools that hackers can exploit. By establishing clear policies, training employees, investing in secure platforms, and monitoring AI activity, businesses in North Carolina can embrace AI safely.

Final Thought: In North Carolina’s evolving digital economy, AI can be your most valuable ally—if you teach it to help you, not hack you.